10x Genomics

Chromium Single Cell ATAC

Cell Ranger ATAC 1.0, printed on 07/14/2025

The Cell Ranger ATAC User Interface

Cell Ranger ATAC's pipelines expose a user interface (UI) for monitoring progress through a web browser. By default, this UI is exposed at an operating-system assigned port, with a randomly-generated authentication token to restrict access. Specifying the --uiport=3600 option when using cellranger-atac will force the UI to be exposed on port 3600, and the --disable-ui option will turn off the UI.

This UI is accessible through a web interface that runs on the given port on the machine where the pipeline was started. The URL to use with the web browser is printed to the pipeline standard output and log files, and can also be found in the [sampleid]/_uiport file where the pipeline was launched.
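As a minimal sketch of retrieving that URL from a script, the shell functions below read it either from the `_uiport` file or from captured pipeline output. The function names are hypothetical. The sketch assumes that the `_uiport` file holds the URL on its first line and that the log line has the form `Serving UI at <url>`.

```shell
# Print the UI URL recorded for a pipestance directory (e.g. sample345).
# Assumption: the URL is the first line of <pipestance>/_uiport.
ui_url_from_file() {
    head -n 1 "$1/_uiport"
}

# Print the UI URL found in pipeline output read from standard input.
# Assumption: the line has the form "Serving UI at <url>".
ui_url_from_log() {
    sed -n 's/^.*Serving UI at \(http[^ ]*\).*$/\1/p' | head -n 1
}
```

For example, `ui_url_from_file /home/jdoe/sample345` would print the URL for a pipestance launched from `/home/jdoe` with `--id=sample345`.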

Understanding the UI

When the pipeline runs, it will display the URL for the UI.

    $ cd /home/jdoe
    $ cellranger-atac count --id=sample345 \
        --reference=/opt/refdata-cellranger-atac-GRCh38-1.0.1 \
        --fastqs=/home/jdoe/HAWT7ADXX/outs/fastq_path \
        --sample=mysample
    Martian Runtime - 3.1.0
    Serving UI at http://host.example.com:5603/?auth=mcV3MKANWfNTERRGASgYV8aXskx-rSH7hxynAdsTieA
    Running preflight checks (please wait)...
    2018-09-17 21:33:47 [runtime] (ready) ID.sample345.SC_ATAC_COUNTER_CS.SC_ATAC_COUNTER._BASIC_SC_ATAC_COUNTER._ALIGNER.SETUP_CHUNKS
    2018-09-17 21:33:47 [runtime] (run:local) ID.sample345.SC_ATAC_COUNTER_CS.SC_ATAC_COUNTER._BASIC_SC_ATAC_COUNTER._ALIGNER.SETUP_CHUNKS.fork0.chnk0.main
    2018-09-17 21:33:56 [runtime] (chunks_complete) ID.sample345.SC_ATAC_COUNTER_CS.SC_ATAC_COUNTER._BASIC_SC_ATAC_COUNTER._ALIGNER.SETUP_CHUNKS
    ...

The UI will become unavailable once the pipeline completes unless the --noexit flag is passed.

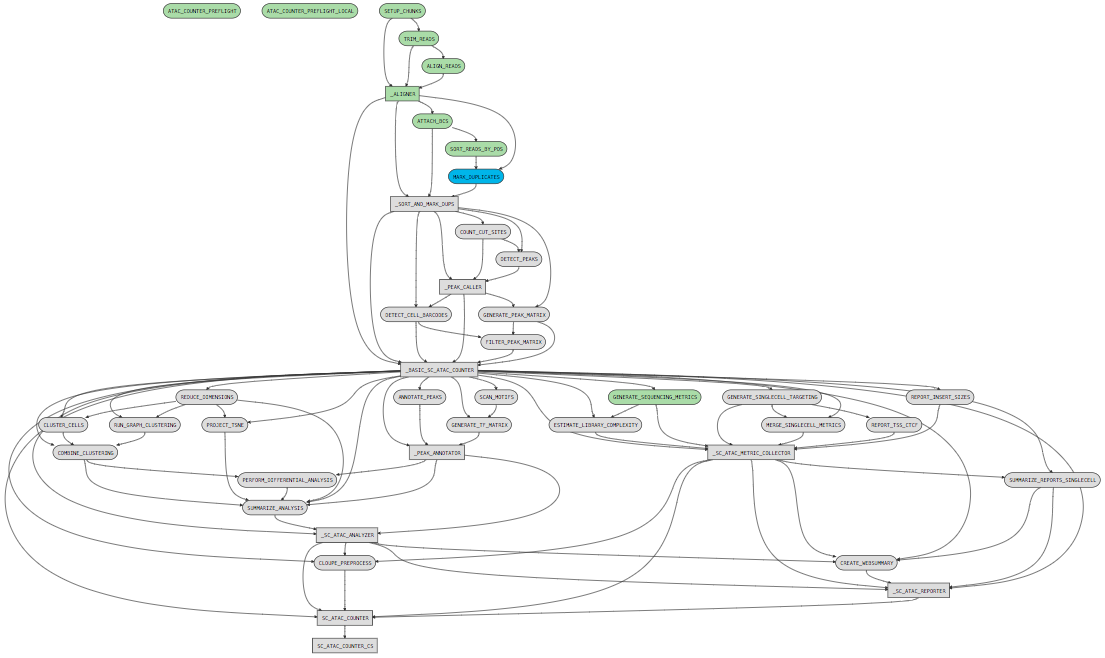

While a pipeline is running, you can open the URL (http://host.example.com:5603/?auth=mcV3MKANWfNTERRGASgYV8aXskx-rSH7hxynAdsTieA) in your web browser to view the pipeline process graph:

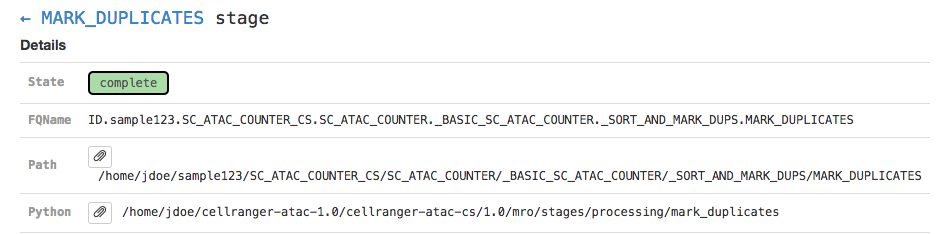

Clicking on any of the graph's nodes will reveal more information about that stage in the right pane. This info pane is broken into several sections, and the topmost shows high-level details about the stage's execution. For example, the MARK_DUPLICATES stage would show:

This includes the state of the stage (running, failed, or complete), the fully-qualified stage name (FQName), the directory in which the stage is running (Path), the stagecode that is being run (the location of the Python package being run in the above example), and any information about parameter sweeping that may apply to this stage.

Clicking the arrow next to the stage name (← MARK_DUPLICATES in the above example) will return to the pipeline-level metadata view.

The Sweeping section allows you to view the MRO call used to invoke this stage (invocation) and information about the split and join components of the stage if it was parallelized.

Martian, the pipeline framework used by Cell Ranger ATAC, supports parameter sweeping for pipelines. The Forks and Permute fields in the Sweeping section would display information about the different parameters being swept, but no Cell Ranger ATAC pipeline currently performs parameter sweeping. As a result, these fields will always contain only trivial information.

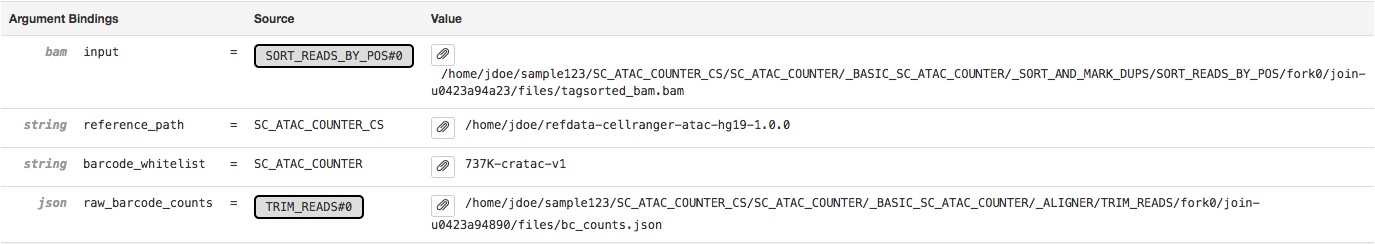

The Argument Bindings and Return Bindings sections display the inputs and outputs of the stage:

In general, only top-level pipeline stages (those represented by rectangular nodes in the process graph) contain return bindings.

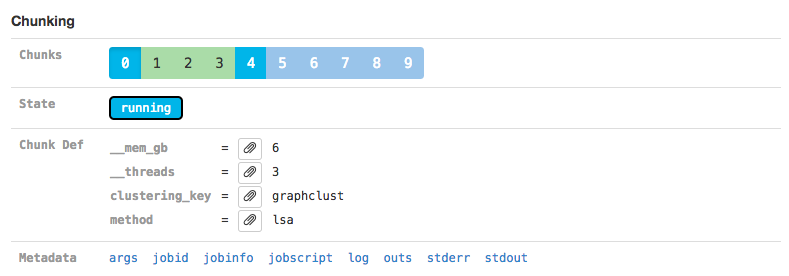

The Chunking section displays information about the parallel execution of the stage:

Many stages are automatically parallelized and process different pieces (chunks) of the same input dataset in parallel. In the above example, the input dataset (the clusterings from the COMBINE_CLUSTERING stage) was split into chunks, one for each kind of clustering (graph-based for chunk 0). Chunks 1-3 have already completed, chunks 0 and 4 are in flight, and chunks 5-9 are waiting for CPU and/or memory to become available before running.

Clicking on an individual chunk exposes additional options for viewing metadata about that chunk's execution, including any errors, standard output, and, in cluster mode, the job script used to queue the job to the cluster. The jobinfo includes information about how a chunk was executed, selected environment variables, and, for completed chunks, various performance statistics such as peak memory usage and CPU time used. As with the Sweeping section, additional metadata associated with the chunk execution can also be viewed.
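Error metadata can often be inspected on disk as well. The sketch below rests on an assumption that this guide does not state: that Martian writes a file named `_errors` into the directory of any chunk or stage that failed. The function names are hypothetical.

```shell
# List every _errors file under a pipestance directory.
# Assumption: such files exist only for chunks or stages that failed.
list_failed() {
    find "$1" -type f -name _errors | sort
}

# Print the first lines of each error file, prefixed by its path.
# $1: pipestance directory, $2: number of lines to show (default 20)
show_errors() {
    list_failed "$1" | while IFS= read -r f; do
        printf '== %s ==\n' "$f"
        head -n "${2:-20}" "$f"
    done
}
```

Running `show_errors /home/jdoe/sample345` would then print a short excerpt from each failure, if any exist.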

Launching the UI for Existing Pipelines

You can also examine pipestances that have already completed using the Cell Ranger ATAC UI. Assuming your pipestance output directory was /home/jdoe/sample345, simply re-run the cellranger-atac command with the --noexit option to re-attach the UI:

    $ cd /home/jdoe
    $ cellranger-atac count --id=sample345 \
        --reference=/opt/refdata-cellranger-atac-GRCh38-1.0.1 \
        --fastqs=/home/jdoe/HAWT7ADXX/outs/fastq_path \
        --sample=mysample --noexit
    Serving UI at http://host.example.com:3600
    2012-01-01 12:00:00 [runtime] Reattaching in local mode.
    Running preflight checks (please wait)...
    Pipestance completed successfully, staying alive because --noexit given.

Because cellranger-atac assumes it is resuming an incomplete pipeline job when re-attaching, the pipeline must still be valid and the preflight checks must still pass. As such, relocating a completed pipeline may prevent the UI from re-attaching.
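One inexpensive check before re-attaching is to confirm that the paths given to the original command still exist. This is a sketch under the assumption that missing inputs are among the things the preflight checks reject; it does not reproduce the checks themselves. The function name is hypothetical.

```shell
# Print each given path that no longer exists.
# Returns non-zero if at least one path is missing.
missing_paths() {
    status=0
    for p in "$@"; do
        if [ ! -e "$p" ]; then
            printf 'missing: %s\n' "$p"
            status=1
        fi
    done
    return "$status"
}

# Example call, using the paths from the command above:
#   missing_paths /home/jdoe/sample345 \
#       /opt/refdata-cellranger-atac-GRCh38-1.0.1 \
#       /home/jdoe/HAWT7ADXX/outs/fastq_path
```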

Accessing the UI Through a Firewall

If you run pipelines on a server that blocks access to all ports except SSH, you can still access the Cell Ranger ATAC UI using SSH forwarding. Assuming you have a cellranger-atac pipeline running on port 3600 on cluster.university.edu, you can type the following from your laptop:

    $ ssh -NT -L 9000:cluster.university.edu:3600 jdoe@cluster.university.edu
    jdoe@cluster.university.edu's password:

Upon entering your password (assuming you are jdoe@cluster.university.edu), the command will appear to hang. However, in the background it has mapped port 9000 on your laptop to port 3600 on cluster.university.edu through the ssh connection for which you just entered your password.

This allows you to go to http://localhost:9000/ in your web browser and access the UI running on cluster.university.edu:3600. Once you are done examining the UI, use Ctrl+C in your ssh -NT -L ... terminal window to terminate this SSH forwarder.
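The mapping between the remote UI URL and the forwarding command can be sketched with two small shell helpers. The function names are hypothetical. The sketch assumes that the URL always carries an explicit port and that any `?auth=...` query string printed by the pipeline must be kept when browsing through the tunnel.

```shell
# Print the ssh port-forwarding command for a UI URL.
# $1: UI URL as printed by the pipeline, e.g. http://host:3600/?auth=TOKEN
# $2: SSH login, e.g. jdoe@cluster.university.edu
# $3: local port to listen on (default 9000)
# Assumption: the URL contains an explicit port.
ui_tunnel_command() {
    hostport=${1#*://}         # strip the scheme
    hostport=${hostport%%/*}   # strip the path and query string
    host=${hostport%%:*}
    port=${hostport##*:}
    printf 'ssh -NT -L %s:%s:%s %s\n' "${3:-9000}" "$host" "$port" "$2"
}

# Print the URL to open locally once the tunnel is up.
# $1: UI URL as printed by the pipeline, $2: local port (default 9000)
# The path and query string (including any auth token) are kept unchanged.
ui_local_url() {
    rest=${1#*://}
    case $rest in
        */*) rest=/${rest#*/} ;;
        *)   rest=/ ;;
    esac
    printf 'http://localhost:%s%s\n' "${2:-9000}" "$rest"
}
```

The first helper only prints the command instead of running it, so the result can be reviewed before a connection is opened.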

A full explanation of SSH forwarding is beyond the scope of this guide, but the OpenSSH Documentation explains this in greater depth.
